How to use Notion as the CMS for your Next.js blog

I started using Notion a couple of years ago (in 2021) to manage my YouTube content. I really liked the product and how easily it lets me organize my ideas, scripts, personal journal, and more, so I decided to use it for my personal blog as well.

One of the challenges was displaying my Notion content on my website. Fortunately, Notion has a REST API that gives you access to all your content. In this tutorial, I'll walk you through all the necessary steps in case you want to do the same.

I worked on this project in late 2022. At the time, my blog was powered by WordPress, and I decided to move it to Next.js for several reasons:

- I wanted to learn this framework, and I always build something when I want to learn a new technology or framework.

- It's based on React, which I've been using since 2015, so there was nothing new to learn there.

- It generates static sites that can be deployed to GitHub Pages. Static pages are good for SEO, and I didn't want to maintain a web server.

- It's quite popular with good documentation, which is essential when seeking help.

I also considered GatsbyJS and AstroJS, but I chose Next.js for the reasons above, especially my familiarity with React.

Initializing the Project

Getting started with Next.js is straightforward. I decided to use TypeScript, and following the documentation was all it took to initialize the project.

Whenever it's up to me, I use Tailwind CSS for styling. Integrating Tailwind with Next.js is also simple: follow the documentation, and within a few minutes Tailwind will be working.

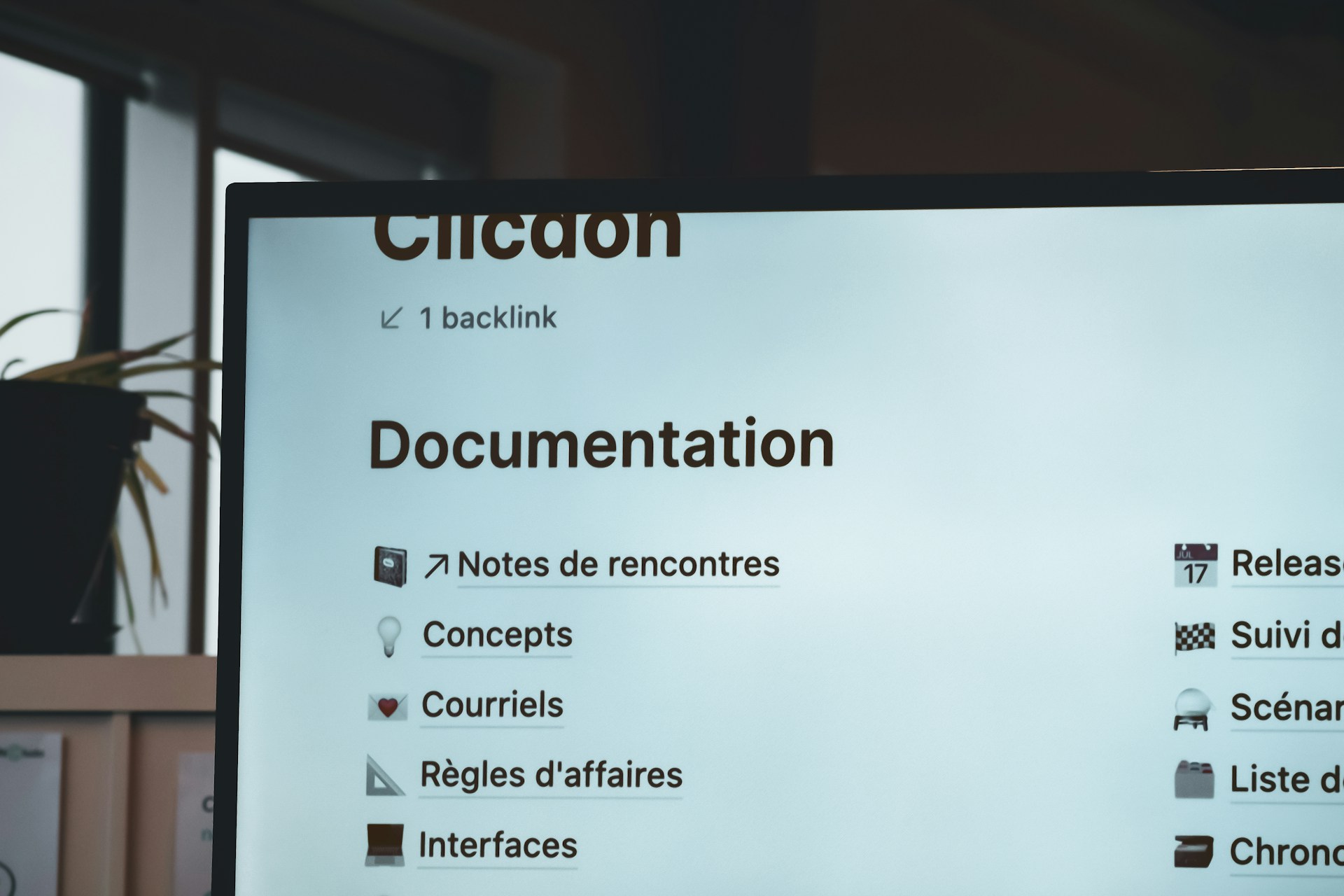

Integration with Notion

The next step is to fetch content from Notion through its API. To do this, you need to create an integration in your Notion account. I gave mine read-only access, since the blog only reads content and never writes anything. Once you create the integration, you'll receive a Secret Key, which you'll use in every request to authenticate and access your content.

After creating the integration, you need to connect it to a database. Notion calls these collections of pages databases. Open the database, and in the menu at the upper right you'll find a Connections option with an Add Connection entry. The submenu lists the available integrations, including the one you just created. Select it, and you're done.

Finally, you need the ID of the database. You can obtain this ID from the URL of the collection. For example, the URL of my blog collection is:

https://www.notion.so/crysfel/4b751e1cdfe1471b97912bceee115156?v=38bb03c48f0a42c79b8c84ea4a7c2800

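So the ID of the database is 4b751e1cdfe1471b97912bceee115156, the 32-character string at the end of the path. The v parameter after the question mark identifies a view of the database, not the database itself. You'll need this ID later to retrieve all published articles.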
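If you'd rather not copy the ID by hand, here's a small sketch that extracts it from the URL. It assumes, based on the example above, that the ID is the 32-character hexadecimal string at the end of the URL path; extractDatabaseId is a hypothetical helper, not part of Notion's SDK:

```typescript
// Hypothetical helper: pulls the database ID out of a Notion database URL.
// Assumption: the ID is the 32-character hex string at the end of the URL path
// (Notion sometimes prefixes it with a page title, e.g. "My-Blog-<id>").
function extractDatabaseId(databaseUrl: string): string | null {
  // Drop the query string (?v=... identifies a view) and any #fragment
  const path = databaseUrl.split(/[?#]/)[0];
  // Take the trailing 32 hex characters of the path
  const match = path.match(/[0-9a-f]{32}$/i);
  return match ? match[0].toLowerCase() : null;
}

const blogDatabaseId = extractDatabaseId(
  "https://www.notion.so/crysfel/4b751e1cdfe1471b97912bceee115156?v=38bb03c48f0a42c79b8c84ea4a7c2800",
);
console.log(blogDatabaseId); // 4b751e1cdfe1471b97912bceee115156
```

The function returns null instead of throwing, so a build script can print a clear error when the configured URL doesn't look like a database URL.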

Now that you have the Secret Key and the Database ID, you're ready to call the API. I recommend testing your requests in Postman first to make sure they work.

Reading All Published Posts

For each post, I created a property called Status, which has various values like Idea, Draft, Review, and Publish. I have a nice board where I move cards between these columns.

Now, I want to read only the posts whose Status is Publish, ordered from most recently published. Here I ran into trouble, because Notion's documentation wasn't very clear to me. There are several endpoints, and I couldn't find which one returned exactly what I needed, so I tried them all. It turns out the right one is the database query endpoint, which makes sense once you know that Notion calls collections databases. At the time, I didn't know that, and it took me a while to figure it out.

The request for this example would be as follows:

POST https://api.notion.com/v1/databases/[DATABASE_ID]/query

Body payload:

```json
{
  "page_size": 20,
  "filter": {
    "and": [
      {
        "property": "Status",
        "select": {
          "equals": "publish"
        }
      },
      {
        "property": "Type",
        "select": {
          "equals": "post"
        }
      }
    ]
  },
  "sorts": [
    {
      "property": "Date",
      "direction": "descending"
    }
  ]
}
```

As you can see, you can set how many posts each query returns (page_size, up to 100 per request), as well as filters and sort order. I have several types of entries, such as projects and books, so for the blog I only fetch those of type post.
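Since the API returns at most 100 results per request, a single query won't return every post once a blog grows past that. Each response includes has_more and next_cursor, and you pass the cursor back as start_cursor to get the next batch. Here's a minimal sketch of that loop, assuming a hypothetical fetchPage callback that wraps the query endpoint (taking it as a parameter keeps the example runnable without network access):

```typescript
// Shape of one batch from a Notion list/query endpoint.
interface NotionList<T> {
  results: T[];
  has_more: boolean;
  next_cursor: string | null;
}

// Hypothetical callback: fetches one batch, starting at the given cursor.
type PageFetcher<T> = (startCursor?: string) => Promise<NotionList<T>>;

// Follows next_cursor until has_more is false and returns every result.
async function collectAll<T>(fetchPage: PageFetcher<T>): Promise<T[]> {
  const all: T[] = [];
  let cursor: string | undefined = undefined;
  do {
    const page: NotionList<T> = await fetchPage(cursor);
    all.push(...page.results);
    cursor = page.has_more && page.next_cursor ? page.next_cursor : undefined;
  } while (cursor !== undefined);
  return all;
}

// Usage with an in-memory fake that serves 5 items, 2 per batch.
const sampleItems = ["a", "b", "c", "d", "e"];
const fakeFetchPage: PageFetcher<string> = async (startCursor) => {
  const start = startCursor ? Number(startCursor) : 0;
  const end = start + 2;
  return {
    results: sampleItems.slice(start, end),
    has_more: end < sampleItems.length,
    next_cursor: end < sampleItems.length ? String(end) : null,
  };
};

collectAll(fakeFetchPage).then((all) => {
  console.log(all.join(",")); // a,b,c,d,e
});
```

With the SDK, fetchPage could be something like (start_cursor) => notion.databases.query({ database_id, start_cursor, page_size: 100, filter, sorts }).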

Don't forget to send the Secret Key in the Authorization header of the request, as a bearer token (Authorization: Bearer followed by your key). You also need to send a Notion-Version header; otherwise, you'll get an error. The documentation includes an example.
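To make the headers concrete, here's a small sketch that assembles the same query request without sending it. The function names are hypothetical, and 2022-06-28 is just an example Notion-Version value; use the version listed in the documentation for your integration:

```typescript
// Example API version; pin the one your integration was built against.
const NOTION_VERSION = "2022-06-28";

function notionHeaders(secret: string): Record<string, string> {
  return {
    // The integration's Secret Key, sent as a bearer token
    Authorization: `Bearer ${secret}`,
    // Required on every request
    "Notion-Version": NOTION_VERSION,
    "Content-Type": "application/json",
  };
}

// Builds the database query request from this post; databaseId and secret
// are placeholders for your own values.
function buildQueryRequest(databaseId: string, secret: string, pageSize = 20) {
  const body = {
    page_size: pageSize,
    filter: {
      and: [
        { property: "Status", select: { equals: "publish" } },
        { property: "Type", select: { equals: "post" } },
      ],
    },
    sorts: [{ property: "Date", direction: "descending" }],
  };
  return {
    url: `https://api.notion.com/v1/databases/${databaseId}/query`,
    init: { method: "POST", headers: notionHeaders(secret), body: JSON.stringify(body) },
  };
}

// Sending it with the global fetch (Node 18+ or the browser):
// const { url, init } = buildQueryRequest(DATABASE_ID, SECRET);
// const data = await (await fetch(url, init)).json();
```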

This request returns all matching posts with most of their properties, but the most important part is missing: the content itself. To get it, you have to make another request for each post to the following endpoint, using the post's ID:

GET https://api.notion.com/v1/blocks/[POST_ID]/children

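Again, don't forget the Authorization and Notion-Version headers. The response is JSON containing the post's content as a list of blocks. Each paragraph, heading, or image is a block, and blocks can have children of their own (marked with has_children), depending on how you've formatted the content. This endpoint only returns the first level of children, so nested blocks require additional requests with the child block's ID.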
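If your posts use nested blocks, such as toggles or nested lists, the children of those blocks aren't in the first response. Here's a minimal sketch of fetching the whole tree recursively. The listChildren callback is a hypothetical wrapper around GET /v1/blocks/{id}/children (with the SDK, something like notion.blocks.children.list); injecting it keeps the recursion testable without network access:

```typescript
// Minimal block shape: Notion blocks carry id, type, and has_children.
interface Block {
  id: string;
  type: string;
  has_children: boolean;
  children?: Block[];
}

// Hypothetical callback returning ALL direct children of a block
// (a real wrapper should also follow next_cursor).
type ListChildren = (blockId: string) => Promise<Block[]>;

// Fetches a block's children and, recursively, the children of any block
// that reports has_children, attaching them under `children`.
async function fetchBlockTree(blockId: string, listChildren: ListChildren): Promise<Block[]> {
  const blocks = await listChildren(blockId);
  for (const block of blocks) {
    if (block.has_children) {
      // One extra request per nested block, so deep nesting costs more calls
      block.children = await fetchBlockTree(block.id, listChildren);
    }
  }
  return blocks;
}

// Usage with an in-memory fake: a page with a paragraph and a toggle
// whose child is another paragraph.
const fakeBlocks: Record<string, Block[]> = {
  page: [
    { id: "p1", type: "paragraph", has_children: false },
    { id: "t1", type: "toggle", has_children: true },
  ],
  t1: [{ id: "p2", type: "paragraph", has_children: false }],
};

fetchBlockTree("page", async (id) => fakeBlocks[id] ?? []).then((tree) => {
  console.log(tree[1].children?.map((child) => child.id).join(",")); // p2
});
```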

Caching Information Locally

The next problem was where to keep the content. I use it in several places, but only while generating the static HTML: there's no Node web server in production, just HTML and CSS, which keeps the site fast.

I decided to write a script that queries the Notion API, fetches all the content, and saves it to a local JSON file. This way, Next.js only has to read that JSON file, and all my content is available and ready to use.

The script is quite simple. It makes an initial request to Notion to fetch all the published posts. Then it iterates through that list, making an individual request for each post to fetch its content.
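Because the script makes one request per post, a blog with many posts can run into Notion's rate limit; the documentation describes an average of three requests per second per integration and answers with HTTP 429 when an integration exceeds it. A simple way to stay under it is to make the per-post requests one at a time with a short pause. This is a sketch with hypothetical names (mapSequentially, sleep); the 350 ms default is an assumption, not a value from the original script:

```typescript
// Hypothetical helper: resolves after `ms` milliseconds.
function sleep(ms: number): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Runs `task` for each item one at a time, waiting `delayMs` between calls.
// 350 ms keeps the script under roughly 3 requests per second (assumed default).
async function mapSequentially<T, R>(
  items: T[],
  task: (item: T) => Promise<R>,
  delayMs = 350,
): Promise<R[]> {
  const results: R[] = [];
  for (let i = 0; i < items.length; i++) {
    if (i > 0 && delayMs > 0) {
      await sleep(delayMs);
    }
    results.push(await task(items[i]));
  }
  return results;
}

// Usage sketch: a fake task stands in for the per-post blocks request.
mapSequentially(["post-a", "post-b"], async (id) => `content of ${id}`, 0).then((contents) => {
  console.log(contents.join(" | ")); // content of post-a | content of post-b
});
```

In the real script, items would be the query results and task would wrap notion.blocks.children.list({ block_id: node.id }).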

To send requests to Notion, you can use any HTTP client, but I chose the official Notion SDK, the @notionhq/client package. The initial request looks like this:

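```javascript
const Notion = require('@notionhq/client')

// NOTION_PK holds the integration's Secret Key and NOTION_DB_ID the database ID
const notion = new Notion.Client({ auth: process.env.NOTION_PK })

// Posts per request (example value; the API accepts up to 100)
const PAGE_SIZE = 100

// This runs inside an async function: CommonJS modules (require)
// don't support top-level await
const response = await notion.databases.query({
  database_id: process.env.NOTION_DB_ID,
  page_size: PAGE_SIZE,
  filter: {
    and: [
      {
        property: 'Status',
        select: {
          equals: 'publish'
        }
      },
      {
        property: 'Type',
        select: {
          equals: 'post'
        }
      }
    ]
  },
  sorts: [
    {
      property: 'Date',
      direction: 'descending'
    }
  ]
})
```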

Once I have the list of published posts, I iterate through it and request the content of each post individually:

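```javascript
const posts = []

// `response` comes from the database query above
for (const node of response.results) {
  // A page's content is the list of its child blocks
  const postResponse = await notion.blocks.children.list({
    block_id: node.id,
  })

  const post = {
    id: node.id,
    title: node.properties.Content.title[0].plain_text,
    content: postResponse.results,
    createdAt: node.created_time,
    publishedAt: node.properties.Date.date.start,
    // Fall back to the page ID when the Slug property is empty
    slug: node.properties.Slug?.rich_text[0]?.plain_text || node.id,
    permalink: node.properties.Permalink.url,
    tags: node.properties.Tags.multi_select.map(tag => tag.name.toLowerCase()),
    image: null, // <--- TODO: Download this image!!
  }

  posts.push(post)
}
```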

And that's it: all the information is now available locally! I built a new array of posts containing only the fields I needed and saved it as a JSON file (public/data/posts.json) to use later.
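Because the pagination code later in this post is TypeScript, it can help to describe the shape of the saved JSON once and reuse it. The interface below mirrors the post object built by the script; the field types (for example, permalink being nullable, since a Notion URL property can be empty) and the parsePosts check are my assumptions, a sketch rather than part of the original project:

```typescript
// Local cover image URLs, relative to the site root
interface PostImage {
  original: string;
  large: string;
  tns: string;
  tiny: string;
}

// One entry of posts.json, mirroring the post object built by the script
interface Post {
  id: string;
  title: string;
  content: unknown[]; // raw Notion blocks, typed loosely here
  createdAt: string; // ISO timestamp from created_time
  publishedAt: string; // start of the Date property
  slug: string;
  permalink: string | null; // Notion URL properties can be empty
  tags: string[];
  image?: PostImage | null; // missing or null when a post has no cover
}

// Parses the cached JSON with a light sanity check, so a malformed file
// fails the build instead of producing broken pages.
function parsePosts(json: string): Post[] {
  const data: unknown = JSON.parse(json);
  if (!Array.isArray(data)) {
    throw new Error("posts.json must contain an array of posts");
  }
  for (const item of data) {
    if (typeof item?.id !== "string" || typeof item?.slug !== "string") {
      throw new Error("every post needs a string id and slug");
    }
  }
  return data as Post[];
}
```

In getStaticProps, calling parsePosts(allPostsJson) instead of JSON.parse would give allPosts the Post[] type.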

Downloading Notion Images

Another problem is the cover image each post has. I select these images directly in Notion, either by uploading one from my hard drive or by using the Unsplash widget.

Images uploaded to Notion are private: the Notion API returns a signed URL pointing to where they're stored in Amazon S3, and that URL expires after a short time (Notion's documentation says one hour, and the response includes an expiry_time). Therefore, you need to download the images so they can be served from wherever you deploy the site, in my case GitHub Pages.

Downloading images or files in Node is not complicated. I did it as follows:

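```javascript
const fs = require('fs')
const path = require('path')
const axios = require('axios')

// remoteImage is the cover URL from Notion; getImageFormat returns the file extension
const imageName = `${node.id}.${getImageFormat(node)}`

await downloadImage(remoteImage, imageName)

async function downloadImage(url, imageName) {
  const filename = `./public/images/original/${imageName}`

  // Only download files that don't exist. This is mainly for development:
  // when building for production, CI always starts from a clean instance
  if (fs.existsSync(filename)) {
    return
  }

  // Create the destination folder if needed
  fs.mkdirSync(path.dirname(filename), { recursive: true })

  // Request the image before opening the file, so a failed download
  // doesn't leave an empty file that would be skipped on the next run
  const response = await axios({
    url,
    method: 'GET',
    responseType: 'stream',
  })

  const writer = fs.createWriteStream(filename)
  response.data.pipe(writer)

  return new Promise((resolve, reject) => {
    writer.on('finish', resolve)
    writer.on('error', reject)
  })
}
```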

As you can see, I download the images into the project's public folder. Next.js serves the files in this folder from the site root and includes them in the build output, so the images need to be here to be available once the site is deployed.
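The snippet above calls getImageFormat(node), whose implementation isn't shown. As a hypothetical stand-in, here's a version that works from the image URL instead of the Notion page: it reads the extension from the URL path and falls back to jpg, since Unsplash URLs often have no extension at all. The list of accepted formats is an assumption:

```typescript
// Extensions accepted as-is; anything else falls back (assumed list).
const KNOWN_IMAGE_FORMATS = new Set(["jpg", "jpeg", "png", "webp"]);

// Hypothetical stand-in for getImageFormat, based on the image URL.
function getImageFormatFromUrl(imageUrl: string, fallback = "jpg"): string {
  // Ignore the query string: signed S3 URLs carry long signatures there
  const path = imageUrl.split(/[?#]/)[0];
  const lastSegment = path.substring(path.lastIndexOf("/") + 1);
  const dot = lastSegment.lastIndexOf(".");
  if (dot === -1) {
    return fallback;
  }
  const ext = lastSegment.slice(dot + 1).toLowerCase();
  return KNOWN_IMAGE_FORMATS.has(ext) ? ext : fallback;
}

// Made-up example URLs
console.log(getImageFormatFromUrl("https://example-bucket.s3.amazonaws.com/abc/cover.PNG?X-Amz-Signature=123")); // png
console.log(getImageFormatFromUrl("https://images.unsplash.com/photo-1500000000000?ixlib=rb-4.0.3")); // jpg
```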

Resizing Images

Another problem I ran into is that Unsplash images are very large, which makes the site slow on initial load. To fix this, I resize each image into smaller versions:

```javascript
const sharp = require('sharp')
// fs and path are Node's built-in modules, required at the top of the script

const sizes = [
  { name: 'tiny', width: 10 },
  { name: 'tns', width: 500 },
  { name: 'large', width: 1200 },
]

const imageName = `${node.id}.${getImageFormat(node)}`

await resizeImageToMultipleSizes(imageName, sizes)

async function resizeImage(inputPath, outputPath, size) {
  // Make sure the folder for this size exists
  fs.mkdirSync(path.dirname(outputPath), { recursive: true })

  // Read the input image, resize it to the configured width (sharp keeps
  // the aspect ratio when only the width is given), and save it
  await sharp(inputPath).resize(size.width).toFile(outputPath)
}

async function resizeImageToMultipleSizes(imageName, sizes) {
  const inputPath = `./public/images/original/${imageName}`

  // Create one resized copy per size configuration
  for (const size of sizes) {
    const outputPath = `./public/images/${size.name}/${imageName}`
    await resizeImage(inputPath, outputPath, size)
  }
}
```

To resize the images, I used a library called sharp. It's fast, and the resized images keep good quality. Once an image is downloaded and resized, I save its local URLs in the post object that goes into the JSON file:

```javascript
// Covers are either external (e.g. Unsplash) or files uploaded to Notion
const remoteImage = node.cover?.type === 'external'
  ? node.cover?.external?.url
  : node.cover?.file?.url

// Posts without a cover keep image = null
let image = null

if (remoteImage) {
  const sizes = [
    { name: 'tiny', width: 10 },
    { name: 'tns', width: 500 },
    { name: 'large', width: 1200 },
  ]

  const imageName = `${node.id}.${getImageFormat(node)}`

  await downloadImage(remoteImage, imageName)
  await resizeImageToMultipleSizes(imageName, sizes)

  // URLs relative to the site root, since public/ is served from "/"
  image = {
    original: `/images/original/${imageName}`,
    large: `/images/large/${imageName}`,
    tns: `/images/tns/${imageName}`,
    tiny: `/images/tiny/${imageName}`,
  }
}

const post = {
  id: node.id,
  title: node.properties.Content.title[0].plain_text,
  content: postResponse.results,
  createdAt: node.created_time,
  publishedAt: node.properties.Date.date.start,
  slug: node.properties.Slug?.rich_text[0]?.plain_text || node.id,
  permalink: node.properties.Permalink.url,
  tags: node.properties.Tags.multi_select.map(tag => tag.name.toLowerCase()),
  image,
}
```
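In the snippets above, the list of sizes appears twice and the image URLs are written out by hand, so adding a size means editing several places. A hedged refactoring sketch (IMAGE_SIZES, resizeTargets, and publicImageUrls are hypothetical names) derives both the disk paths that sharp writes and the URLs stored in the JSON from a single list:

```typescript
// One entry per resized version of a cover image.
interface ImageSize {
  name: string;
  width: number;
}

// Single source of truth for the sizes used by the resize step.
const IMAGE_SIZES: ImageSize[] = [
  { name: "tiny", width: 10 },
  { name: "tns", width: 500 },
  { name: "large", width: 1200 },
];

// Disk paths for sharp: read from public/images/original, write one file per size.
function resizeTargets(imageName: string, sizes: ImageSize[] = IMAGE_SIZES) {
  return sizes.map((size) => ({
    size,
    inputPath: `./public/images/original/${imageName}`,
    outputPath: `./public/images/${size.name}/${imageName}`,
  }));
}

// URLs stored in the JSON: "original" plus one key per size, relative to the site root.
function publicImageUrls(imageName: string, sizes: ImageSize[] = IMAGE_SIZES): Record<string, string> {
  const urls: Record<string, string> = { original: `/images/original/${imageName}` };
  for (const size of sizes) {
    urls[size.name] = `/images/${size.name}/${imageName}`;
  }
  return urls;
}

console.log(publicImageUrls("abc.jpg")["tns"]); // /images/tns/abc.jpg
```

With this, resizeTargets(imageName) can drive the resize loop, and publicImageUrls(imageName) can replace the hand-written image object.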

And that's it! The integration with Notion is complete. Now, I have the posts locally, along with the images for each one.

Generating Pagination

This took me a while to figure out, and I asked my friend Manduks for the best way to do it. My original idea was a script that generated a React component for each page; it worked, but it didn't seem like a good approach.

Manduks pointed me to the getStaticPaths function. In a dynamic route, this function tells Next.js which paths to generate at build time, and it can query a REST API or a CMS to figure them out. This was exactly what I needed. Querying Notion during the build would be slow (all those requests and image downloads), and the site is static in production anyway, so I read the JSON file I generated earlier instead.

Here, I calculate the number of pages needed and return one path per page. Next.js then generates the HTML for each of them when building.

```typescript
import { promises as fs } from 'fs'

// Posts per listing page, from an environment variable (10 if unset)
const POST_PER_PAGE: number = parseInt(process.env.POST_PER_PAGE ?? '10', 10)

type Page = {
  params: {
    slug: string;
  }
}

export async function getStaticPaths() {
  const paths: Page[] = []
  const allPostsJson = await fs.readFile(`./public/data/posts.json`, 'utf-8');
  const allPosts = JSON.parse(allPostsJson);
  const totalPages: number = Math.ceil(allPosts.length / POST_PER_PAGE)

  // One path per page, with slugs "1", "2", ..., up to totalPages
  for (let i = 0; i < totalPages; i++) {
    const page: Page = {
      params: {
        slug: `${i + 1}`,
      },
    }
    paths.push(page)
  }

  return {
    paths,
    // Any page number not listed in paths returns a 404
    fallback: false,
  };
}
```

Once Next.js knows which pages to generate, the next step is to provide the data for each page. For that, we use the getStaticProps function:

```typescript
export async function getStaticProps(context) {
  // The slug is the page number; fall back to 1 if it isn't a number
  const page: number = parseInt(context.params.slug, 10) || 1
  const allPostsJson = await fs.readFile(`./public/data/posts.json`, 'utf-8');
  const allPosts = JSON.parse(allPostsJson);

  // Select only the posts that belong to this page
  const startIndex = (page - 1) * POST_PER_PAGE;
  const endIndex = startIndex + POST_PER_PAGE;
  const posts = allPosts.slice(startIndex, endIndex)

  // Hypothetical: the unique tags across all posts
  const tags: string[] = Array.from(
    new Set<string>(allPosts.flatMap((post: { tags: string[] }) => post.tags))
  )

  return {
    props: {
      posts,
      params: {
        ...context.params,
        paginator: {
          total: Math.ceil(allPosts.length / POST_PER_PAGE),
          current: page,
        },
      },
      tags,
    },
  }
}
```

The crucial part here is reading the slug parameter from the context object. From it, we calculate the current page and pass only the posts for that page as props, along with the paginator information (the total number of pages and the current page).
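The arithmetic in getStaticPaths and getStaticProps can also be pulled into pure functions, which makes it easy to test without reading posts.json. Here's a minimal sketch; pageCount, pageSlugs, and paginate are hypothetical names, and unlike the code above it always produces at least one page (so an empty blog still gets page 1) and clamps out-of-range page numbers:

```typescript
// Number of pages; always at least 1 so an empty blog still has page 1.
function pageCount(totalItems: number, perPage: number): number {
  if (!Number.isInteger(perPage) || perPage < 1) {
    throw new Error(`perPage must be a positive integer, got ${perPage}`);
  }
  return Math.max(1, Math.ceil(totalItems / perPage));
}

// Slugs for getStaticPaths: "1", "2", ..., up to the page count.
function pageSlugs(totalItems: number, perPage: number): string[] {
  return Array.from({ length: pageCount(totalItems, perPage) }, (_, i) => String(i + 1));
}

interface Paginated<T> {
  items: T[];
  paginator: { total: number; current: number };
}

// Items and paginator data for one page, as getStaticProps needs them.
// A non-integer page (e.g. NaN from parseInt) falls back to 1, and
// out-of-range pages are clamped to [1, total].
function paginate<T>(all: T[], requestedPage: number, perPage: number): Paginated<T> {
  const total = pageCount(all.length, perPage);
  const page = Number.isInteger(requestedPage) ? requestedPage : 1;
  const current = Math.min(Math.max(page, 1), total);
  const start = (current - 1) * perPage;
  return { items: all.slice(start, start + perPage), paginator: { total, current } };
}

// 23 posts at 10 per page: 3 pages, and page 3 holds posts 21 to 23.
const demoPosts = Array.from({ length: 23 }, (_, i) => i + 1);
const pageThree = paginate(demoPosts, 3, 10);
console.log(pageThree.paginator.total, pageThree.items.join(",")); // 3 21,22,23
```

In getStaticPaths, the paths could become pageSlugs(allPosts.length, POST_PER_PAGE).map((slug) => ({ params: { slug } })), and getStaticProps could call paginate(allPosts, page, POST_PER_PAGE).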

And there you have it! Each page now receives exactly the data it needs. The next step is rendering it with React, but that's beyond the scope of this tutorial, and there are plenty of tutorials on that topic.

To build the site for production, run three commands in the terminal:

```bash
$ yarn blog
$ yarn build
$ yarn export
```

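The first command runs the script we created earlier (blog is a custom script in package.json), which fetches all the information from Notion and downloads the images to your machine. The second and third commands run the Next.js build and export, which generate the static site. Note that in Next.js 13.3 and later, next export is deprecated in favor of setting output: 'export' in next.config.js, and Next.js 14 removed the command, so on newer versions the build step alone produces the static site.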

In a future tutorial, I'll show how to automate deployment to GitHub Pages with GitHub Actions. That way, deployment can run automatically whenever you push to the master branch, or manually from the GitHub interface.

What do you think of my integration? Feel free to send any questions via Twitter.

Happy coding!
